Study Finds Eye Gaze Signals Provide Key Answers in Assistive Device Control

Our eyes are powerful communicators, and they’re even more vital when other muscles stop working. For example, in the final stages of amyotrophic lateral sclerosis (ALS), many people can’t move their arms or legs, but they can control their eyes. However, few technologies exist to help these people move around easily.

Researchers with the Center for Advancing Safety of Machine Intelligence (CASMI) are hopeful their study will lead to a solution. Using eye-tracking technology, they gathered data about eye gaze signals, with help from 11 people with disabilities, and concluded that it’s likely assistive devices can be designed to allow for smoother control. Their findings will be published at the 2023 Institute of Electrical and Electronics Engineers (IEEE) International Conference on Rehabilitation Robotics (ICORR) in a paper titled “Characterizing Eye Gaze for Assistive Device Control.”

The study is part of the CASMI-funded research project, “Formal Specifications for Assistive Robotics.” The team’s members are Principal Investigator Brenna Argall, Northwestern University associate professor of computer science, mechanical engineering, and physical medicine and rehabilitation; Todd Murphey, Northwestern professor of mechanical engineering; Larisa Loke, Northwestern PhD student in mechanical engineering; Hadas Kress-Gazit, senior endowed professor at the Cornell University Sibley School of Mechanical and Aerospace Engineering; and Guy Hoffman, associate professor at the Cornell University Sibley School of Mechanical and Aerospace Engineering.

“The main focus of our work was to see whether there are further signals for more information that we can use to achieve continuous control,” said Loke, who will become a fourth-year PhD student in the fall. “This study is like a pilot. We collected some data to see what we have and to see whether we can do anything with it.”

Next, Kress-Gazit will use these findings to produce formal guidelines for eye-gaze-based devices.

“If successful, we would have a first example of specifications related to this human-computer interaction system,” Argall said. “The long-term goal is to empower stakeholders, like end-users, their caregivers, and their clinicians, to design interfaces that allow for interaction with assistive technology.”

How Researchers Made Their Findings

Researchers recruited 11 people for the study. Ten of them have a spinal cord injury, and one has ALS.

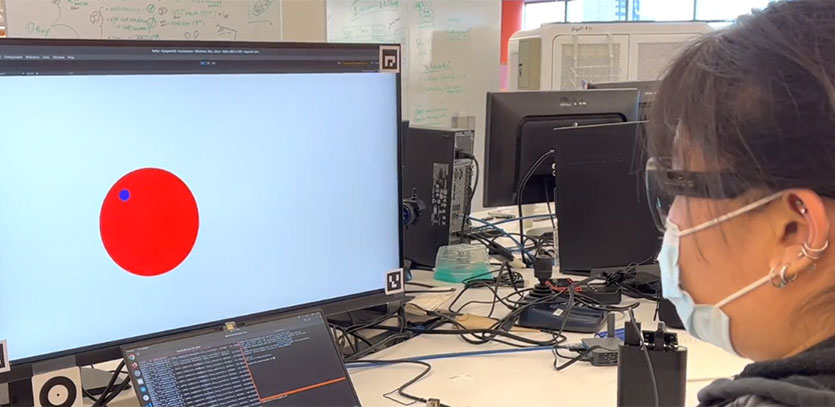

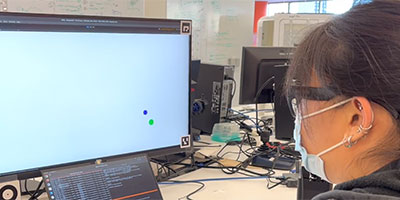

Study participants wore eye-tracking research glasses, which are fitted with tiny cameras and “mirrors” that read what the eyes are doing. The participants performed four timed tasks in front of a screen. The first measured their ability to “paint” the screen using their eyes. The next two tasks asked them to focus on circles that randomly appeared on the screen. The final task tracked how well they could follow moving targets with their eyes.

Results varied, but researchers did find some common patterns. Study participants performed better when they had visual feedback in the form of a dot indicating where their gaze was focused. However, they tended to take longer to complete tasks with visual feedback.

The study said interfaces should be customized to align with the participants’ skills and comfort levels.

“There would be specific areas where people have more fidelity,” Loke said. “They would be able to give finer control in those areas because their eyes tend to prefer to live in those areas of the screen.”

“There are pathways to personalization that make sense to pursue,” Argall said. “Lots of times, when you’re doing this sort of research, what you boil things down to are a couple of salient knobs that the end-user should be able to turn. That allows them to customize things well for themselves. Part of this research is figuring out what those knobs should be.”

The Significance of This Work

Federal data shows that decreased mobility is the most common type of disability in the US, and an estimated 3 million Americans use wheelchairs.

Many powered wheelchairs allow users to control only one function at a time, which can result in choppy motions. Argall said signals from eye-gaze devices are difficult to interpret, but they are very rich.

“There is a lot of information we can encode in our eye movements, but it’s really subject to interpretation,” she said. “You really want to extract as much information as you can from it.”

Researchers plan to use this preliminary data to build tools that can be shared with people who are not roboticists or computer science experts.

Loke said she’ll incorporate these findings into a larger project she is working on in the assistive & rehabilitation robotics laboratory (argallab) at the Shirley Ryan AbilityLab, a rehabilitation hospital in downtown Chicago. The goal is to improve how signals from interfaces are being interpreted.