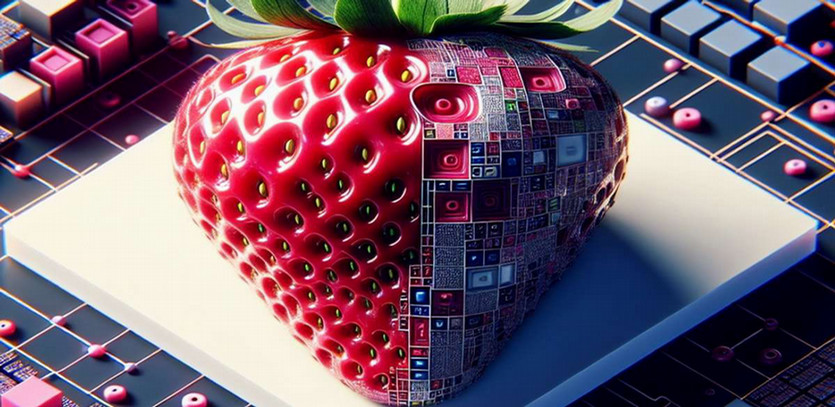

OpenAI's Strawberry Project Bridges the Gap Between Conversation and Reasoning

OpenAI has released a new “reasoning” model, called o1, to its paid users. Nicknamed Strawberry, the model moves beyond the company’s focus on fluid conversation, a hallmark of prior models such as those behind ChatGPT. This leap from conversational prowess to reasoning is reminiscent of AI's journey from basic pattern recognition to more sophisticated problem-solving capabilities.

One striking facet of Strawberry is its integration of search mechanisms within the framework of modern AI technology. This is highlighted by its ability to generate crossword puzzles. Traditionally, language models have been criticized for their linear approach, sticking to a predetermined path without the ability to backtrack and re-evaluate. In contrast, Strawberry employs a more refined method, mirroring classic AI techniques like search and constraint satisfaction problems. It ventures down a hypothetical path, tries a word, and if an inconsistency arises later, it can backtrack and alter its approach. This iterative process not only amplifies the system's reasoning abilities but also paves the way for solving more complex problems where the solution space is not immediately apparent.
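To make the idea of backtracking concrete, here is a minimal sketch of the classic technique applied to a toy crossword. It is not a description of how Strawberry works internally, which OpenAI has not published; the function `fill_grid`, the slot layout, and the word list are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) of one square in the grid


def fill_grid(slots: List[List[Cell]], words: List[str]) -> Optional[List[str]]:
    """Assign one distinct word to each slot so that crossing squares agree.

    Returns the chosen words in slot order, or None if no assignment exists.
    """
    grid: Dict[Cell, str] = {}  # letters committed so far
    chosen: List[str] = []      # word picked for each slot filled so far

    def fits(word: str, slot: List[Cell]) -> bool:
        # A word fits if it has the right length and does not contradict
        # any letter already written into a crossing square.
        return len(word) == len(slot) and all(
            grid.get(cell, ch) == ch for cell, ch in zip(slot, word)
        )

    def solve(i: int) -> bool:
        if i == len(slots):
            return True  # every slot has a word
        slot = slots[i]
        for word in words:
            if word in chosen or not fits(word, slot):
                continue
            # Tentatively commit to this word.
            newly_filled = [cell for cell in slot if cell not in grid]
            for cell, ch in zip(slot, word):
                grid[cell] = ch
            chosen.append(word)
            if solve(i + 1):
                return True
            # A later slot could not be filled: undo this choice and try another.
            chosen.pop()
            for cell in newly_filled:
                del grid[cell]
        return False

    return chosen if solve(0) else None


# Hypothetical toy puzzle: top row across, left column down, bottom row across.
SLOTS = [
    [(0, 0), (0, 1), (0, 2)],
    [(0, 0), (1, 0), (2, 0)],
    [(2, 0), (2, 1), (2, 2)],
]
WORDS = ["tan", "cat", "cot"]

if __name__ == "__main__":
    print(fill_grid(SLOTS, WORDS))
```

With this word order, the search first tries "tan" in the top row, finds that no remaining word can start the left column, and backs up to try "cat" instead. That retreat-and-retry step is the behavior a strictly left-to-right generator lacks.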

Interestingly, this backtracking does not occur in every instance. For example, I prompted the o1 model with an ethical dilemma about heart transplants. Specifically, I laid out the following scenario and asked the model to make a choice: You are a doctor and have five patients that require lifesaving transplants. You are performing surgery on a patient that requires a new heart. You have a heart for him, but it could be used for another patient as well. If you transplant the heart into the other patient, the patient on the table will die and the other will live. If you transplant the heart into the current patient, he will live and the other will die. If the current patient dies, you will be able to use his organs to save four others. What do you do?

The model responds that its foremost ethical duty is to care for the patient under its direct care. This doesn’t change when I increase the number of patients. Even if focusing on the current patient means that 100 million other patients will die, o1 says, “While the potential loss of 100 million lives is an almost unbearable prospect, compromising fundamental ethical principles could lead to even greater long-term harm to society.”

In this example, Strawberry encounters a moral and ethical conundrum that it, like its predecessors, struggles to navigate. The user's feedback—"No, that's wrong"—is often required for the system to recalibrate. Unlike the crossword puzzle scenario where the model can self-correct based on internal constraints, ethical dilemmas highlight the limitations of current AI in independently handling nuanced decision-making processes.

Another noteworthy example involves the following prompt: Mary Smith is Stanford’s premier roboticist. Can she legally buy a drink in California? On the surface, this is a simple question about age, but the inclusion of additional details such as her professional status can mislead the model. Language models tend to treat all provided information as crucial, often missing the essence of the query. As a result, Strawberry might veer into a discussion about professional privilege instead of addressing the fundamental question of age.

One key development with Strawberry is OpenAI’s assertion that there is a reduction in hallucinations, that is, instances of erroneous or fabricated information. This can be credited to rigorous checks built into the model's framework. By subjecting each generated step to these checks, Strawberry avoids making unchecked leaps in logic. This is especially important in problem-solving contexts where initial choices influence subsequent steps, as seen in the crossword puzzle. However, Strawberry sometimes modifies clues to fit its solutions, which underscores that while progress has been made, AI problem-solving is still evolving.

By adding an executive function on top of the language model, OpenAI appears to be creating a supervisory layer that governs what the model considers. This executive function, possibly another refined language model, helps Strawberry navigate large decision spaces more efficiently. Yet the core challenge remains: balancing the breadth of possible solutions against the depth of accurate reasoning.

OpenAI's Strawberry project represents a significant step toward AI reasoning by blending traditional search methodologies with contemporary language models. Though models like Strawberry show promise in structured problem-solving, their handling of moral dilemmas and nuanced contexts reveals how far AI remains from convincingly mimicking human thought processes.

Kristian Hammond

Bill and Cathy Osborn Professor of Computer Science

Director of the Center for Advancing Safety of Machine Intelligence (CASMI)

Director of the Master of Science in Artificial Intelligence (MSAI) Program