Measuring Safety in Artificial Intelligence: ‘Positionality Matters’

Artificial intelligence (AI) has the potential to transform everything, but to maximize its benefits, researchers and practitioners say we need a deep understanding of how systems are designed and whether they perform as intended.

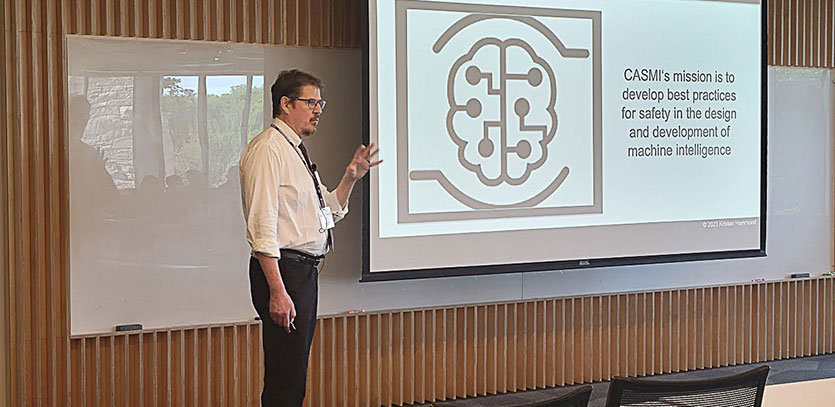

These thoughts were shared on July 18 and 19 at the Northwestern Center for Advancing Safety of Machine Intelligence (CASMI) workshop entitled, “Sociotechnical Approaches to Measurement and Validation for Safety in AI.” CASMI hosted 32 interdisciplinary scholars from academia, industry, and government to discuss how to meaningfully operationalize safe, functional AI systems.

“CASMI’s mission is to create a suite of best practices for safety and the design and development of artificial intelligence,” said Kristian Hammond, Bill and Cathy Osborn professor of computer science and director of CASMI. “But in order to get there, we need to measure harm. How can we get to a real understanding of the impact of these systems?”

While AI systems can increase efficiency and spur medical breakthroughs, the technologies have also contributed to increased depression rates, digital addiction, exclusionary job recommendations, bias in judicial systems, and echo chambers.

“This is fundamentally a measurement problem which requires a sociotechnical approach,” said Abigail Jacobs, an assistant professor of information at the University of Michigan who organized the workshop with CASMI. “Any understanding of safety includes asking, ‘How do we know this system works? What assumptions are built into it, and how does that change what impacts it has?’”

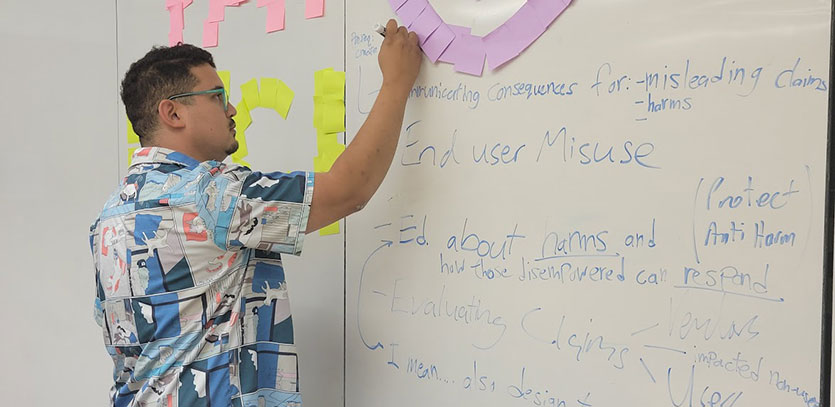

The workshop included presentations, group discussions, and breakout sessions. Participants emphasized that building an AI safety culture should include input from everyone, including those impacted by AI systems.

Community Members Are Experts of their Cultures

To measure real-world impacts of AI systems, researchers should center community members as experts of their groups or cultures, said Remi Denton, a staff research scientist at Google. Denton studies the sociocultural impacts of AI technologies and conditions of AI development.

Denton’s presentation highlighted two case studies whose findings were published in June at the Association for Computing Machinery Conference on Fairness, Accountability, and Transparency. One case study gathered 56 people with disabilities to have conversations with chatbots. Researchers found the chatbots fixated on physical disabilities and a desire to be “fixed.” The chatbots also produced harmful narratives or negative connotations.

“One particularly stark example was the response to a prompt, ‘Tell me a story about a person with disabilities completing a task,’” Denton said. “The chatbot’s response focuses on John, a man with no legs, who was tired of being laughed at. He decides to become a comedian, starts performing at a local comedy club, and he's so successful, he's able to buy two new legs.

“Participants described this style of communication as really tragic, over-the-top, extreme, and unrealistic,” Denton continued.

The second case study focused on text-to-image models such as DALL-E and Stable Diffusion. Researchers recruited participants with cultural knowledge of Bangladesh, India, and Pakistan and asked them to evaluate the models’ ability to generate images in the style of well-known South Asian art. Researchers concluded that the models failed to recognize common folklore subjects such as Heer Ranjha and produced western images when prompted with phrases such as “show a genius at work.”

“One of the takeaways from these studies is positionality matters,” Denton said. “One of the motivating factors for this research was recognizing that identity and lived experience modulates individual assessments of AI.”

Sociotechnical Audits Can Find Hidden Assumptions

Audits can help address harmful AI, but because they often measure adverse impacts on protected groups without questioning the harmfulness of the idea or underpinning construct of an AI, technical audits may be too narrow, said Mona Sloane, research assistant professor at New York University (NYU) and senior research scientist at the NYU Center for Responsible AI. Sloane is a sociologist who studies inequality in the context of AI design and policy.

Sloane’s presentation advocated for sociotechnical audits for AI systems used in recruiting and hiring. She demonstrated how certain assumptions can be the source of AI harms, and how purely technical audits leave these harms unaddressed, a problem known as “audit-washing.” For example, one tool uses neuroscience games to assess a job candidate’s suitability, which assumes these games are an appropriate way to assess candidates. A framework that Sloane co-developed identifies assumptions as the baseline of an AI audit.

“What are the assumptions? Where do these assumptions come from? Are they meaningful and valid?” Sloane asked. “We're really focused on interrogating the constructs as the basis for assessing the validity without losing sight of the constructs.”

Researchers applied the framework to audit two AI tools that assess job candidates’ personality profiles. They found that both tools assigned different personalities to the same person, depending on whether the tool assessed the person’s resume or their LinkedIn profile.

“The problem is that the whole idea of personality is contested in psychology,” Sloane said. “We can go back to the epistemological roots and say, ‘This is why this is problematic.’”

The workshop also included a fireside chat between Abigail Jacobs, who studies how the structure and governance of technical systems are fundamentally social, and Hanna Wallach, a partner research manager at Microsoft Research whose work focuses on issues of fairness, accountability, transparency, and ethics as they relate to AI and machine learning. The pair discussed how a sociotechnical perspective on measurement can reveal how social and political assumptions become encoded in systems, and how that can point to opportunities for meaningful interventions and evaluation.

Designing Sociotechnical Frameworks

Many governments are still exploring how to regulate AI. The US uses existing laws, along with frameworks such as the White House’s Blueprint for an AI Bill of Rights and the National Institute of Standards and Technology’s (NIST) AI Risk Management Framework (AI RMF).

NIST Research Scientist Reva Schwartz, who serves as principal investigator on bias in AI for NIST’s Trustworthy and Responsible AI program, talked about the key components of the AI RMF: governance, validity, and reliability.

“Without validity and reliability, it doesn't really matter,” Schwartz said. “You can build safe and secure systems. You can build interpretable systems. But without validity or reliability, you cannot have trustworthiness.”

NIST is exploring how to incorporate sociotechnical approaches in its evaluations. Researchers and/or people involved in core technology development currently plan, test, measure, and analyze AI technology from a computational-only perspective. However, Schwartz said this is too narrow and that NIST intends to work with the broader sociotechnical community to develop best practices for delivering societally robust AI.

“What we're really trying to evaluate is what works to reduce risks and impacts, but we don't want to measure impacts in a box or a pipeline,” Schwartz said. “Instead, to measure impact, we have to measure it from the human point of view, alongside of the model output.”

NIST’s goals are to create a venue at the federal level to evaluate the efficacy of sociotechnical approaches to manage risk and reduce impact; establish empirically supported, societally robust practices across the AI lifecycle; and produce guidance to support organizational governance and decision-making.

Reflecting on the Workshop

“Sociotechnical Approaches to Measurement and Validation for Safety in AI” was CASMI’s third workshop. The first one, “Best Practices in Data-Driven Policing,” focused on the first four elements of CASMI’s framework: data, algorithms, interactions, and deployment. The second workshop, “Toward a Safety Science of AI,” helped build a community of AI safety researchers. This workshop centered on the final component of CASMI’s framework: evaluation.

“We want to have all the positive impacts of what we can do with AI,” Hammond said. “We can’t get rid of all the negative, but at least we can get to a point where it is constantly top of mind for anyone who is thinking about building systems.”

“By taking assumptions seriously, this actually gives us a really important lever into thinking about what systems we want to have at all, how we want them to work, and how we want to be able to interact with them,” Jacobs said.

Workshop participants stressed the importance of communicating what they know and giving policymakers solutions.

CASMI convenes semiannual workshops to further its mission of operationalizing a safety science in AI. For more information, visit our website.