Researchers Pursue ‘Holy Grail’ to Measure Machine Learning’s Impact on People

Researchers with the Northwestern Center for Advancing Safety of Machine Intelligence (CASMI) have created a Human Impact Scorecard to assess and to demonstrate an artificial intelligence (AI) system’s impact on human well-being.

A team led by Ryan Jenkins, associate professor of philosophy at California Polytechnic State University, scoured scholarly works for ways to quantify machine learning’s impact on people. They spent months researching other fields, like economics and policy, to learn what drives quantitative decision-making.

“What we’re after is a holy grail,” Jenkins said. “It’s been viewed as impossible. We’ve gotten a clear idea of the challenges, which paints the way forward to meet those challenges. The scorecard represents our thinking about the best we can do right now.”

Jenkins is the principal investigator for the CASMI-funded project, “Exploring Methods for Impact Quantification.” He hopes the Human Impact Scorecard can be offered as a datasheet that companies could use to document an AI system’s effects.

“When you publish a product, you’re upfront about weaknesses, drawbacks, and potential risks,” Jenkins said. “We have widely used measures for that in other domains – but not in AI, yet. That is what the scorecard is. It’s standing on the shoulders of that work.”

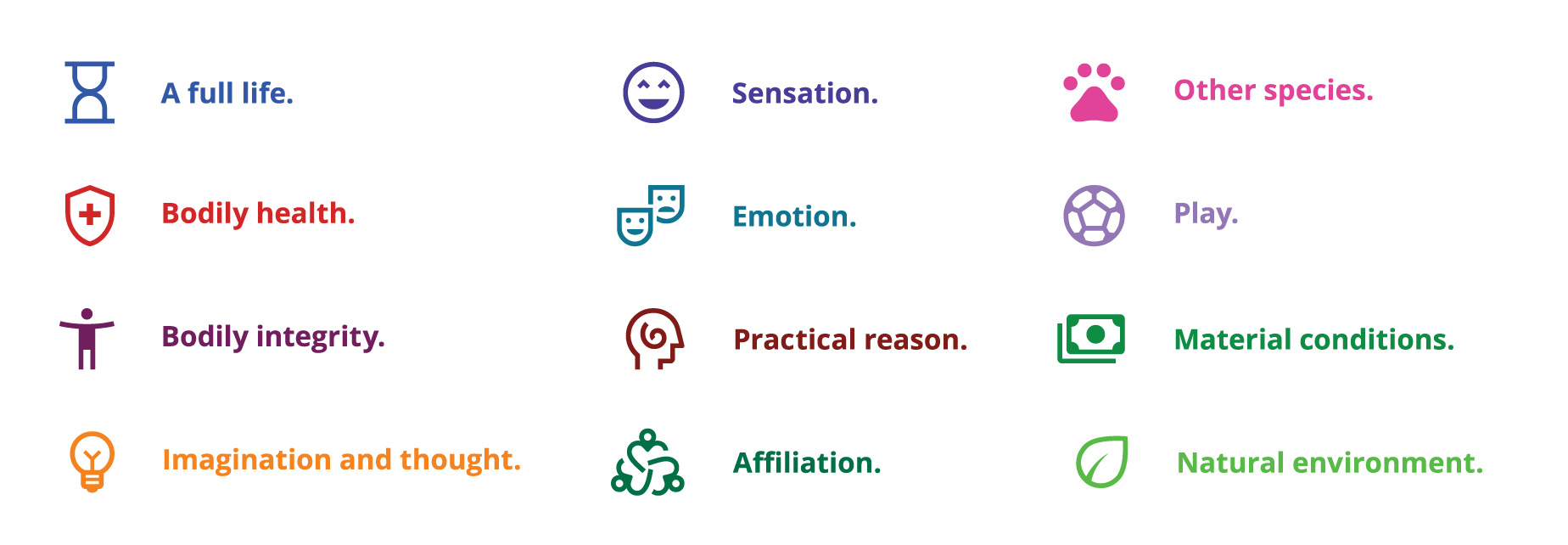

Here’s how it works: the scorecard lists 12 dimensions of human well-being, inspired by the work of philosophers Martha Nussbaum and Amartya Sen. The prototype is pictured below:

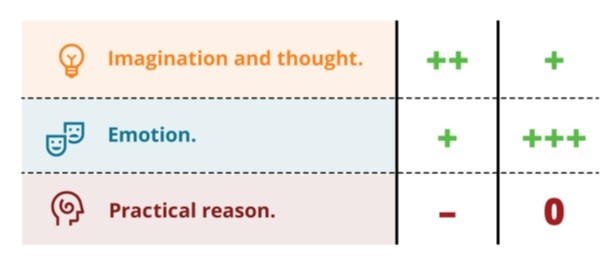

When using the scorecard, one must ask: “Which dimensions are most relevant to the machine learning (ML) app?” For example, suppose an app directs users to nearby parks during long driving trips. In this case, the most appropriate dimensions to measure would be bodily health, play, and natural environment. Each dimension is measured using whatever metrics can help quantify the app’s effect. An example is illustrated below:

In some cases, giving a numerical score is not possible. In these instances, the scorecard will simply give a plus or minus score. This is shown below with an example of the scorecard being used to compare two ML apps:

“Having a tool like this would facilitate analysis at many different stages of the ML development pipeline,” Jenkins said. These stages include training a model and procuring a product. The scorecard can even help consumers make informed decisions about which apps they want to use.
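The article does not describe a software implementation of the scorecard. As a purely illustrative sketch, assuming each relevant dimension holds either a numeric score (when a metric exists) or a plus/minus mark (when it does not), the mechanics described above (choose the relevant dimensions, score each one, then compare two apps) could be modeled in Python as follows. The names `Scorecard`, `rate`, `sign`, and `compare`, the app names, and every score are hypothetical and invented for illustration, as is the rule that two numeric scores share a metric where higher means better.

```python
from dataclasses import dataclass, field
from typing import Dict, Union

# A score is either a number (when a metric can quantify the effect)
# or "+" / "-" (when only the direction of the effect can be judged).
Score = Union[float, str]


@dataclass
class Scorecard:
    """Hypothetical data structure for one app's scorecard."""
    app_name: str
    scores: Dict[str, Score] = field(default_factory=dict)

    def rate(self, dimension: str, score: Score) -> None:
        # Accept a number or a plus/minus mark; reject any other string.
        if isinstance(score, str) and score not in ("+", "-"):
            raise ValueError("qualitative scores must be '+' or '-'")
        self.scores[dimension] = score


def sign(score: Score) -> int:
    """Reduce any score to its direction: +1, -1, or 0."""
    if isinstance(score, str):
        return 1 if score == "+" else -1
    return (score > 0) - (score < 0)


def compare(a: Scorecard, b: Scorecard) -> Dict[str, str]:
    """Name the better app on each dimension that both cards rate.

    Assumption: two numeric scores use the same metric, higher is better.
    If either score is a plus/minus mark, only directions are compared.
    """
    result: Dict[str, str] = {}
    for dim in sorted(a.scores.keys() & b.scores.keys()):
        x, y = a.scores[dim], b.scores[dim]
        if isinstance(x, str) or isinstance(y, str):
            x, y = sign(x), sign(y)
        if x > y:
            result[dim] = a.app_name
        elif y > x:
            result[dim] = b.app_name
        else:
            result[dim] = "tie"
    return result


# Hypothetical example: two park-finding apps with made-up scores.
park_finder = Scorecard("ParkFinder")
park_finder.rate("bodily health", 0.15)
park_finder.rate("play", "+")
park_finder.rate("natural environment", "+")

rival = Scorecard("RoadTripPal")
rival.rate("bodily health", 0.05)
rival.rate("play", "+")
rival.rate("natural environment", "-")

print(compare(park_finder, rival))
# {'bodily health': 'ParkFinder', 'natural environment': 'ParkFinder', 'play': 'tie'}
```

Collapsing mixed scores to their sign keeps numeric and plus/minus entries comparable, at the cost of discarding magnitude whenever one side has only a qualitative mark.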

Jenkins’ research has involved working with consultants in computer science and AI. Lorenzo Nericcio, philosophy and humanities lecturer at San Diego State University, worked as a researcher on the project, and Lulu Savageaux, a third-year philosophy major at Cal Poly, was a research assistant.

“I think the surprising thing we've found is that, though there's a lot of work in this field, there isn't as much work done on how one could make ethically ecumenical, generalizable AI evaluation tools that are agile enough to work in different industries,” Nericcio said. “It's fun to be doing work that appears quite novel.”

“Most of what I found regarding the human impact of AI algorithms focused on the negative, concerning, and almost dystopian implications of employing these systems,” Savageaux said. “While I think it is incredibly important to consider the harmful impacts of AI, the innovations it has made in city planning, health care, environmental design, and many other domains are equally crucial to the creation of the Human Impact Scorecard.”

Jenkins’ work has focused on bridging the humanities and the sciences in a practical, concrete way. He believes a team of ethicists is essential to developing fair systems, and he emphasizes the importance of moving to a place where ethics is front and center throughout development.

“It’s no longer OK to plead ignorance to these issues,” Jenkins said. “This kind of work will help the whole industry move forward ethically.”